How augmented reality gives one doctor surgical 'superpowers'

April 09, 2018

by Lisa Chamoff, Contributing Reporter

The sun has just started to rise behind the thick clouds blanketing upper Manhattan on a recent morning as Dr. Joshua Bederson begins his day at Mount Sinai.

As the Mount Sinai Health System’s head of neurosurgery, Bederson is the captain of the figurative aircraft that is the department of 30 full-time faculty members, 40 residents and 70 advanced practitioners located across the five boroughs of New York City. It’s a metaphor that the amateur pilot uses often to describe his early adoption of the cutting-edge augmented and virtual reality technology that has revolutionized the complex brain surgeries Bederson and his colleagues perform, helping them plan the approach and avoid damaging critical structures adjacent to the tumors they remove and the aneurysms they repair.

The new technology includes a program called Surgical Theater, which uses CT and MR scans to create a 3-D reconstruction of the patient’s anatomy, and navigation software from Brainlab, giving Bederson what feel like superpowers to see through tissues and around corners while piloting his instruments through the brain.

“In aviation, when you’re flying in clouds, you don’t see the mountain or the runway, but you take information from the navigation equipment,” explains Bederson, who has the calm demeanor of someone you would want in the cockpit — or wielding a scalpel for several hours. “You overlay that on your knowledge of the local geography, which is similar to your knowledge of neuroanatomy. And you go back and forth between the instruments, the maps and so on, in an integrative process. In very advanced airplanes, some of that information is projected onto the windscreen in a heads-up display. The advantage of that is you don’t need to stop flying the plane in order to get the information.”

Surgeons at Mount Sinai can also use the DICOM images and Surgical Theater technology to create 360-degree virtual models of their patients’ brains and use an Oculus headset to do a “run through” of the surgery, explains Parth Kamdar, a VR program lead at Surgical Theater who supports the utilization of the technology at the hospital.

“Some doctors say they feel like they already did the surgery,” Kamdar says.

Meeting of the minds

After some work in the quiet of his office, Bederson’s morning begins in earnest with a 7 a.m. educational conference to go over the department’s current cases, including the three surgeries on his plate that day, all resections of pituitary gland tumors pressing on each patient’s optic nerve.

One of the patients has a particularly large pituitary adenoma. As they view the patient’s MRI and CT scans on a screen in the dark conference room, Dr. Kalmon Post, former chairman of the neurosurgery department at Mount Sinai, and the current program director for the neurosurgery residency program, mentions that he recalls hearing at a recent conference about a similar case, in which two surgeons operated on the patient at the same time from different angles. Unfortunately, there ended up being no advantage to the unusual method.

Bederson does have an edge in the large tumor resection scheduled for the afternoon — the newest segmented reality software from Brainlab, coupled with a heads-up display linked to the Zeiss microscope, along with the Surgical Theater augmented reality software.

Before making his way to the OR suite, Bederson stops in the neuro intensive care unit (ICU) to visit three patients who had undergone surgery earlier in the week. On the way, Bederson motions to a vacant building that can be glimpsed out the window of the hospital skyway, which will be the eventual new home of the neuro ICU.

The current neuro ICU is experiencing an overflow problem, and patients also need to be transported to the basement for all their scans, which adds to the time of the exams. The new suite will conveniently have scan rooms built in.

Avoiding ‘no-fly zones’

Bederson’s first surgery of the day, a transnasal endoscopic tumor resection, goes smoothly, with the bulk of the procedure completed within an hour using the Surgical Theater augmented reality technology and Brainlab navigation.

For the second, more complex, surgery, Bederson comes in after the patient is sedated to consult on her positioning based on the heads-up display projection of the tumor into the microscope.

After part of the tumor is removed via a transnasal endoscopic procedure, Bederson comes in about two hours later to start his part of the operation, a sublabial microscopic and endoscopic approach in which he accesses the tumor through the patient’s upper lip.

As Bederson begins the procedure, he observes the power of integrating Brainlab software into the Zeiss operating microscope, bringing GPS-like navigation that tracks the microscope’s focus point relative to the patient's anatomy and then projects an augmented reality view into the heads-up display in his vision of the surgical field.

At first, Bederson is not able to see the patient's carotid artery through the microscope. Although the artery is well visualized on the preoperative MR, it is hidden by the tumor.

The location of the artery – considered a dangerous "no-fly zone" – is visible in the form of a dotted red outline on Bederson's heads-up display and on the high-resolution OR monitor. The monitor can be seen by OR staff and a few students from the Icahn School of Medicine who have come to observe the hours-long surgery. The red lines follow the artery's position as the microscope is moved. As the surgery gets closer to the artery, the anatomy is confirmed with an intraoperative ultrasound, permitting safe removal of the tumor from around it.

Despite the advantages of the technology, Bederson says the surgery is not as successful as he’d hoped it would be. Most of the tumor, which is very vascular and fibrous, is located up high in the patient’s brain, and will likely require a more invasive craniotomy.

Looking to the future

Bederson and the Mount Sinai network are on the cutting edge when it comes to surgical technology, and Mount Sinai’s neurosurgery department is also one of the first to take things to the next level by using the new KINEVO 900 neurosurgical visualization microscope from Zeiss.

The device – which Bederson tested last October and two of which were purchased with the help of a donation from a former patient – feeds optical, navigation and simulation information into a 55-inch 4K 3-D monitor.

“Instead of looking through eyepieces, I’m looking at the video image obtained by the KINEVO,” Bederson explains. “The quality of the heads-up display information that’s injected into the eyepieces is limited because the eyepieces are optimized for optical information provided by the microscope. They’re not well suited for digital information. The information is pixelated and it’s really not as high-quality as it could be. So, we’re actually dumbing down the quality of the digital information to facilitate microscope integration.

“Now, because everything’s a video feed, we can increase the quality of that digital information.”

The KINEVO 900 also allows for surgeon-controlled robotic movement of the microscope, so instead of Bederson having to stop what he’s doing and move the microscope, he can identify a point in the patient and the microscope will only move in relation to that focal point.

Going forward, there will also be image-controlled robotic movement.

“I can identify areas in the Brainlab images that I want to navigate to or stay away from, and we’ll program the microscope to take me through the sequence of places that I’ve identified preoperatively,” Bederson says. “[We] … can either use what we’re seeing through the video feed or we can look at tractography from MRI scans. We can look at cranial nerves. We can say navigate away from this blood vessel that we can’t see yet, but we don’t want to get too close to it. Presumably, we’re going to get more control of what we do based on additional information.”

The ultimate goal of the technology – an advanced airplane, so to speak – is to make Bederson’s job less daunting.

“What we want to do is make it easier for the surgeon, to reduce the workload and bring some of this navigation and simulated information into the field of view in a way that allows us to keep flying the plane while getting that information,” Bederson says. “That’s really the next step of integration.”

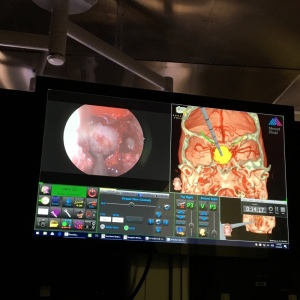

A procedure at Mount Sinai using the Surgical Theater augmented reality technology and Brainlab navigation.
